This blog will explore the key differences between small language models (SLMs) and large language models (LLMs), focusing on how they’re built, their trade-offs in efficiency and resource consumption, the situations where one might be more appropriate than the other and what happens when models are combined.

In recent years, advances in natural language processing (NLP) have revolutionised artificial intelligence (AI), with language models at the forefront of this transformation. Two primary types of models have emerged: large language models (LLMs) and small language models (SLMs). Each is tailored to different use cases based on its scale and application. While both process and generate human language, their distinct strengths and limitations make them better suited to different enterprise applications.

At their core, both LLMs and SLMs are machine learning systems designed to mimic human communication by comprehending, generating, and manipulating language. These models are trained on large datasets, allowing them to learn language patterns through statistical associations. Given an input, such as a question, they generate a response by repeatedly predicting the next word (more precisely, the next token) based on patterns learned from their training data.

As businesses increasingly prioritise AI integration, leaders must understand the trade-offs between small and large language models. Choosing the right AI solution can enhance operational efficiency and drive revenue growth, depending on the organisation’s needs and resources.


Model Size and Complexity

Each AI model contains a number of parameters, which determine its size, complexity, and capacity to capture patterns within the data. Models with more parameters are better able to model the statistical relationships and complexities of data (1).

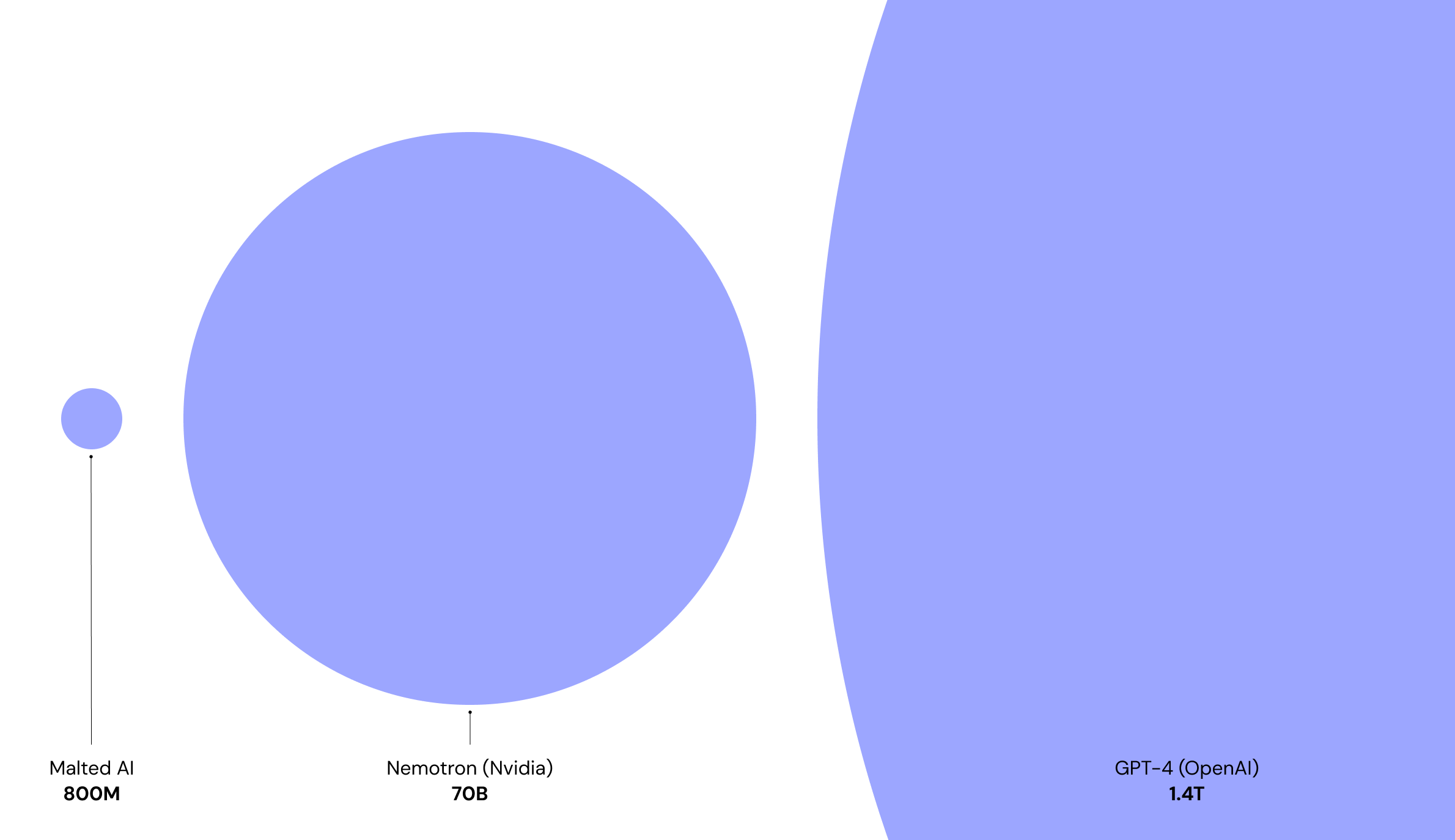

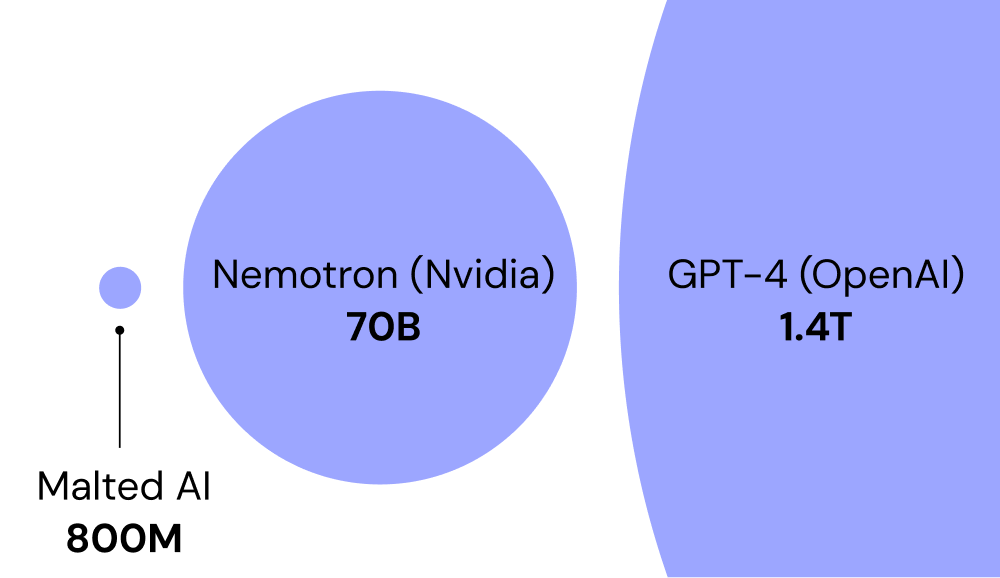

To simplify, the number of parameters can be thought of as a measure of a model’s “size.” For instance, LLMs may contain more than 100 billion parameters, and in some cases up to 1 trillion, while SLMs typically have between 100 million and 10 billion.
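Parameter count translates fairly directly into hardware requirements. As a rough sketch, assuming dense weights stored at a single numeric precision (2 bytes per parameter for 16-bit formats), the memory needed just to hold a model’s weights is the parameter count multiplied by the bytes per parameter. The helper name `weight_memory_gb` is illustrative, and the estimate deliberately ignores activations, caches and framework overhead.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed to hold the weights.

    Hypothetical helper: assumes dense weights at a single precision and
    ignores activations, caches and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts taken from the ranges quoted in the text.
for label, params in [("SLM, 100M", 100e6), ("SLM, 10B", 10e9),
                      ("LLM, 100B", 100e9), ("LLM, 1T", 1e12)]:
    print(f"{label:>10}: {weight_memory_gb(params):>8,.1f} GB at fp16")
```

At 16-bit precision, a 10-billion-parameter SLM needs about 20 GB for its weights, whereas a 100-billion-parameter LLM needs about 200 GB, which exceeds the memory of a typical single GPU.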

Efficiency, Speed and Cost

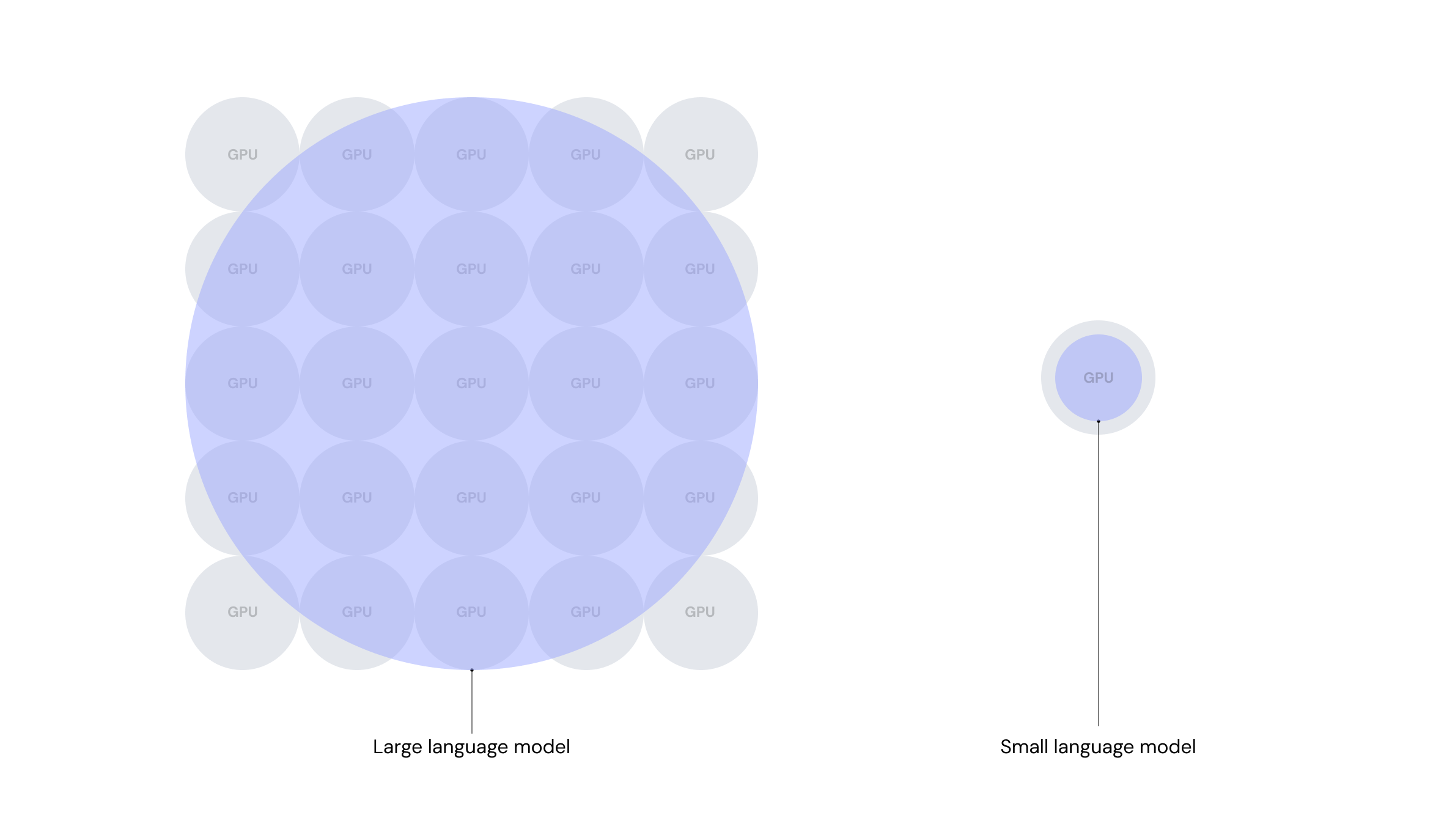

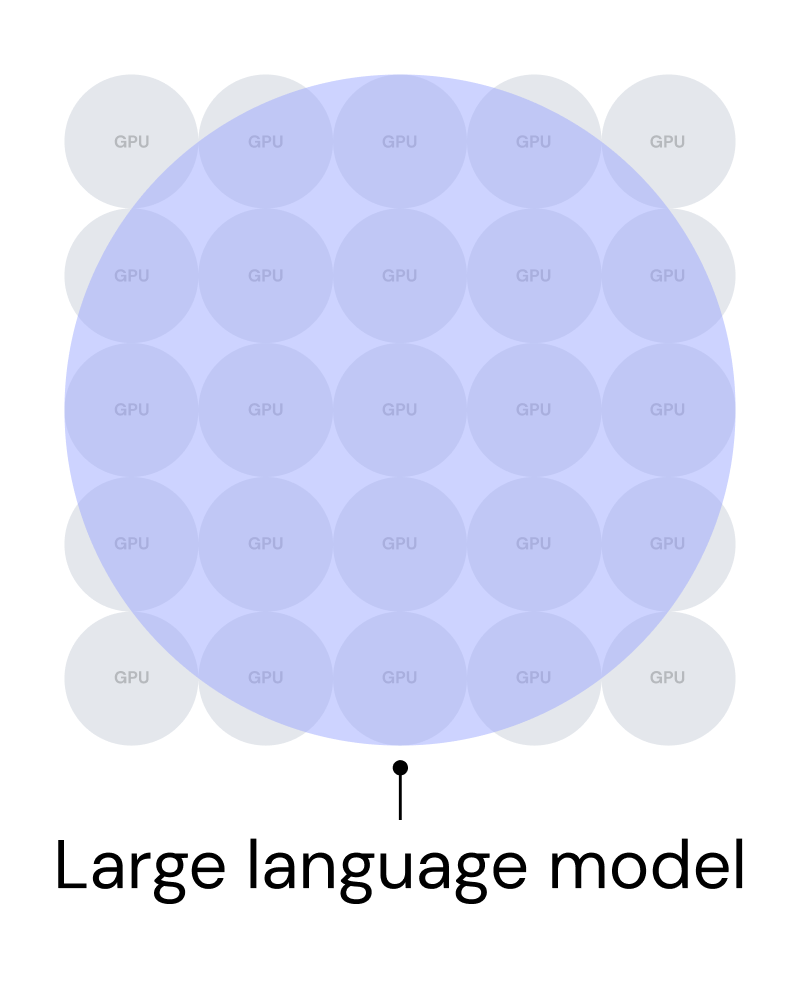

The number of parameters in a language model directly affects the computational power it requires, which is a key reason why running and maintaining LLMs can be expensive (2). LLMs typically need clusters of powerful GPUs, leading to costs that can reach millions of pounds annually. This makes them hard to justify for the many use cases that don’t need such extensive computational power.

Energy consumption is another critical issue. The enormous computational resources that LLMs require for training and operation translate into higher electricity usage and a larger carbon footprint (2).
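The link between compute and energy is simple arithmetic: energy is the number of GPUs multiplied by their power draw and running time, and operational emissions are that energy multiplied by the carbon intensity of the electricity grid. The sketch below encodes that relationship; the function names, the 700 W per-GPU draw, the grid intensity and the optional data-centre overhead factor (`pue`) are all assumptions chosen for illustration, not measurements.

```python
def energy_kwh(num_gpus: int, watts_per_gpu: float, hours: float,
               pue: float = 1.0) -> float:
    """Electricity used by a GPU deployment, in kilowatt-hours.

    `pue` (power usage effectiveness) scales GPU power up to account for
    data-centre overhead such as cooling; 1.0 means no overhead.
    """
    return num_gpus * watts_per_gpu * hours * pue / 1000.0


def emissions_kg(kwh: float, grid_kg_co2e_per_kwh: float) -> float:
    """Operational emissions for a given grid carbon intensity."""
    return kwh * grid_kg_co2e_per_kwh


# All inputs below are hypothetical placeholders, not measured values.
HOURS = 730    # roughly one month of continuous operation
WATTS = 700.0  # assumed power draw per GPU
GRID = 0.2     # assumed kg CO2e per kWh

for label, gpus in [("single-GPU SLM", 1), ("8-GPU LLM node", 8)]:
    kwh = energy_kwh(gpus, WATTS, HOURS)
    print(f"{label}: {kwh:,.0f} kWh, {emissions_kg(kwh, GRID):,.0f} kg CO2e")
```

Because both formulas are linear, an eight-GPU deployment uses eight times the energy of a single GPU under the same assumptions, and its emissions scale in the same proportion.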

In contrast, SLMs are much more cost-effective, often running on a single GPU at a fraction of the cost, typically just a few thousand pounds per month. For businesses aiming to optimise their AI investments, these savings can be significant (3).
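A back-of-the-envelope comparison shows why the hardware bill diverges. Assuming 16-bit weights, a hypothetical 80 GB GPU, a 20% memory headroom for caches and activations, and a placeholder hourly price (none of which come from this article), the sketch below estimates how many GPUs each model needs and what running them continuously for a month would cost. The helper names are illustrative.

```python
import math

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def gpus_needed(num_params: float, bytes_per_param: float = 2.0,
                gpu_memory_gb: float = 80.0, headroom: float = 1.2) -> int:
    """Smallest number of GPUs whose combined memory fits the model.

    Assumptions: 16-bit weights by default, an 80 GB GPU, and a 20% headroom
    multiplier for caches and activations. Ignores replicas added for traffic.
    """
    weight_gb = num_params * bytes_per_param / 1e9
    return max(1, math.ceil(weight_gb * headroom / gpu_memory_gb))


def monthly_cost(num_gpus: int, hourly_rate: float) -> float:
    """Cost of running `num_gpus` continuously for an average month."""
    return num_gpus * hourly_rate * HOURS_PER_MONTH


RATE = 2.0  # hypothetical price per GPU-hour, not a quoted market rate
for label, params in [("7B SLM", 7e9), ("175B LLM", 175e9)]:
    n = gpus_needed(params)
    print(f"{label}: {n} GPU(s), about {monthly_cost(n, RATE):,.0f} per month")
```

Under these placeholder assumptions the larger model needs six times the hardware. Production deployments also add replicas to serve concurrent users, which multiplies the cost again.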

Speed is another critical consideration. LLMs, despite their impressive capabilities, tend to be slower, because generating each token requires computation across a very large number of parameters. This can result in delayed response times, which is particularly problematic for real-time applications or high-volume use cases. The extensive hardware needed to serve LLMs can further compound these delays, especially when many users are interacting with a system simultaneously.

In contrast, SLMs offer a major advantage in terms of speed and efficiency. Their smaller size allows them to operate much faster, making them well-suited for environments with limited hardware capabilities. The reduced computational demand not only leads to quicker response times but also lowers infrastructure and maintenance costs. This makes SLMs an attractive option for businesses seeking faster, more affordable AI solutions without sacrificing too much functionality.
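One way to see why response times scale with model size is the common rule of thumb that a dense transformer spends roughly 2 floating-point operations per parameter for each token it generates. The sketch below uses that approximation to estimate a lower bound on compute time for a workload. The function names, the daily token volume and the sustained throughput figure are hypothetical placeholders, and the bound ignores attention over long contexts and memory-bandwidth limits, which usually make real decoding slower.

```python
def flops_per_token(num_params: float) -> float:
    """Approximate forward-pass FLOPs to generate one token with a dense model.

    Rule of thumb: about 2 FLOPs (one multiply, one add) per parameter.
    """
    return 2.0 * num_params


def min_compute_seconds(num_params: float, num_tokens: float,
                        sustained_flops: float) -> float:
    """Lower bound on compute time for generating `num_tokens` tokens."""
    return flops_per_token(num_params) * num_tokens / sustained_flops


TOKENS_PER_DAY = 1e6  # hypothetical daily workload
THROUGHPUT = 1e14     # hypothetical sustained FLOP/s of the serving hardware

slm = min_compute_seconds(7e9, TOKENS_PER_DAY, THROUGHPUT)
llm = min_compute_seconds(175e9, TOKENS_PER_DAY, THROUGHPUT)
print(f"7B model:   at least {slm:,.0f} s of compute per day")
print(f"175B model: at least {llm:,.0f} s of compute per day ({llm / slm:.0f}x)")
```

The ratio depends only on the parameter counts, so on identical hardware a model 25 times larger needs roughly 25 times the compute for every token it produces.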

Training Data Requirements

LLMs present a distinct set of training challenges. In particular, effective training of an LLM typically requires vast amounts of diverse data, often gathered from large-scale web crawls and other extensive datasets (4). This breadth is what allows LLMs to generalise across many use cases and domains. SLMs, on the other hand, are known for their efficiency and quicker training cycles. They require significantly less training data than LLMs, which makes them well suited to domain-specific use cases (5).

SLMs are an attractive option for organisations looking to implement machine learning solutions in specialised areas where data may be limited.
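The gap in data and compute requirements can be made concrete with two widely cited rules of thumb from the scaling-law literature: training a dense transformer costs roughly 6 FLOPs per parameter per training token, and compute-optimal pretraining (the “Chinchilla” result) uses on the order of 20 tokens per parameter. The sketch below applies both; the function names are illustrative, and these heuristics describe training from scratch, whereas many SLMs are instead fine-tuned from existing checkpoints on much smaller domain datasets.

```python
TOKENS_PER_PARAM = 20  # approximate compute-optimal ratio (Chinchilla heuristic)


def compute_optimal_tokens(num_params: float) -> float:
    """Training tokens suggested by the ~20 tokens-per-parameter heuristic."""
    return TOKENS_PER_PARAM * num_params


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * num_params * num_tokens


for label, params in [("1B SLM", 1e9), ("100B LLM", 100e9)]:
    tokens = compute_optimal_tokens(params)
    print(f"{label}: ~{tokens:.1e} tokens, ~{training_flops(params, tokens):.1e} FLOPs")
```

Because the suggested dataset grows with model size, training compute grows with the square of the parameter count under these heuristics: a model 100 times larger needs about 100 times more data and about 10,000 times more compute.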

Generalists vs Task-Specific Experts

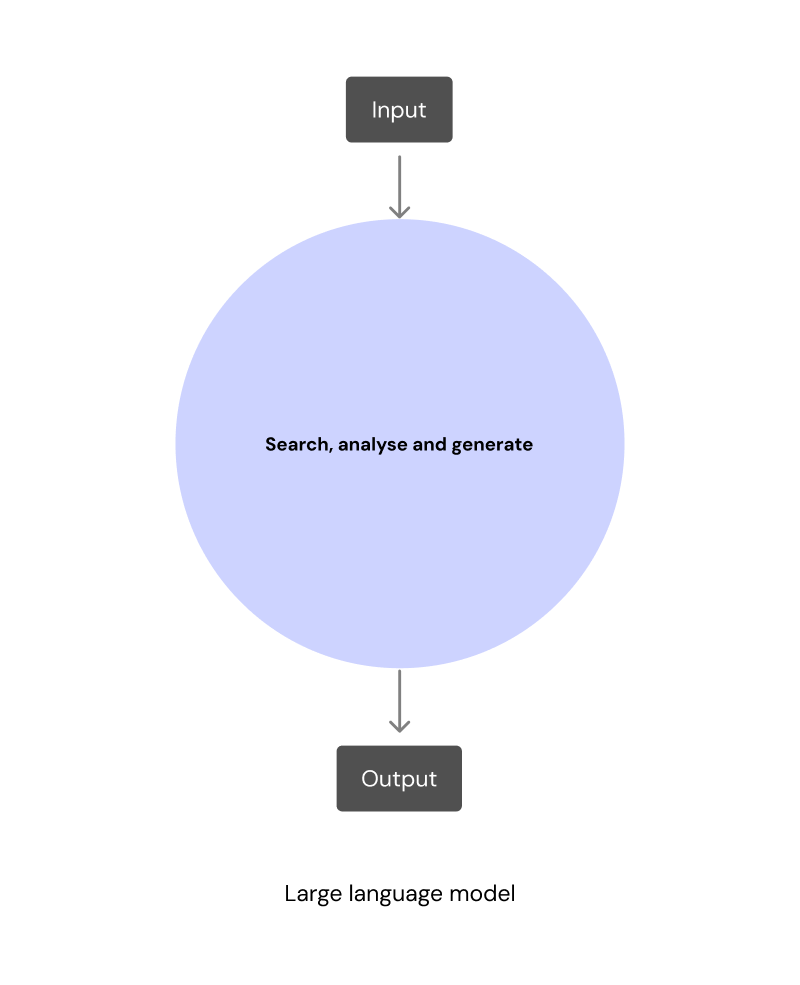

Although LLMs are designed to encompass a broad spectrum of general knowledge, they often struggle to provide precise, domain-specific outputs. This limitation arises because LLMs are generally not trained on the specialised data needed for expert responses in a given field. As a result, their breadth can come at the cost of accurate insights tailored to bespoke applications.

In contrast, SLMs excel as experts within their respective domains. Because they are built for specific tasks, they can concentrate on particular types of data and knowledge, allowing them to handle targeted tasks with greater proficiency.

This distinction is essential for use cases that require reliable answers in niche areas, as SLMs are better equipped to grasp and respond to the complexities of specialised subject matter (6, 7).

Privacy and Security

SLMs offer significant security advantages, primarily because they can run directly on-premises. On-premises processing eliminates the need to transmit sensitive user data to external servers, a requirement often associated with LLMs (8).

By keeping data local, SLMs dramatically reduce the risk of data breaches, unauthorised access, potential misuse of personal information, and data leakage. This characteristic is especially vital in privacy-sensitive fields such as healthcare, finance, and banking, where the protection of user data is paramount.

With SLMs, organisations can better ensure that their users’ data remains secure and private, mitigating concerns about data exposure.

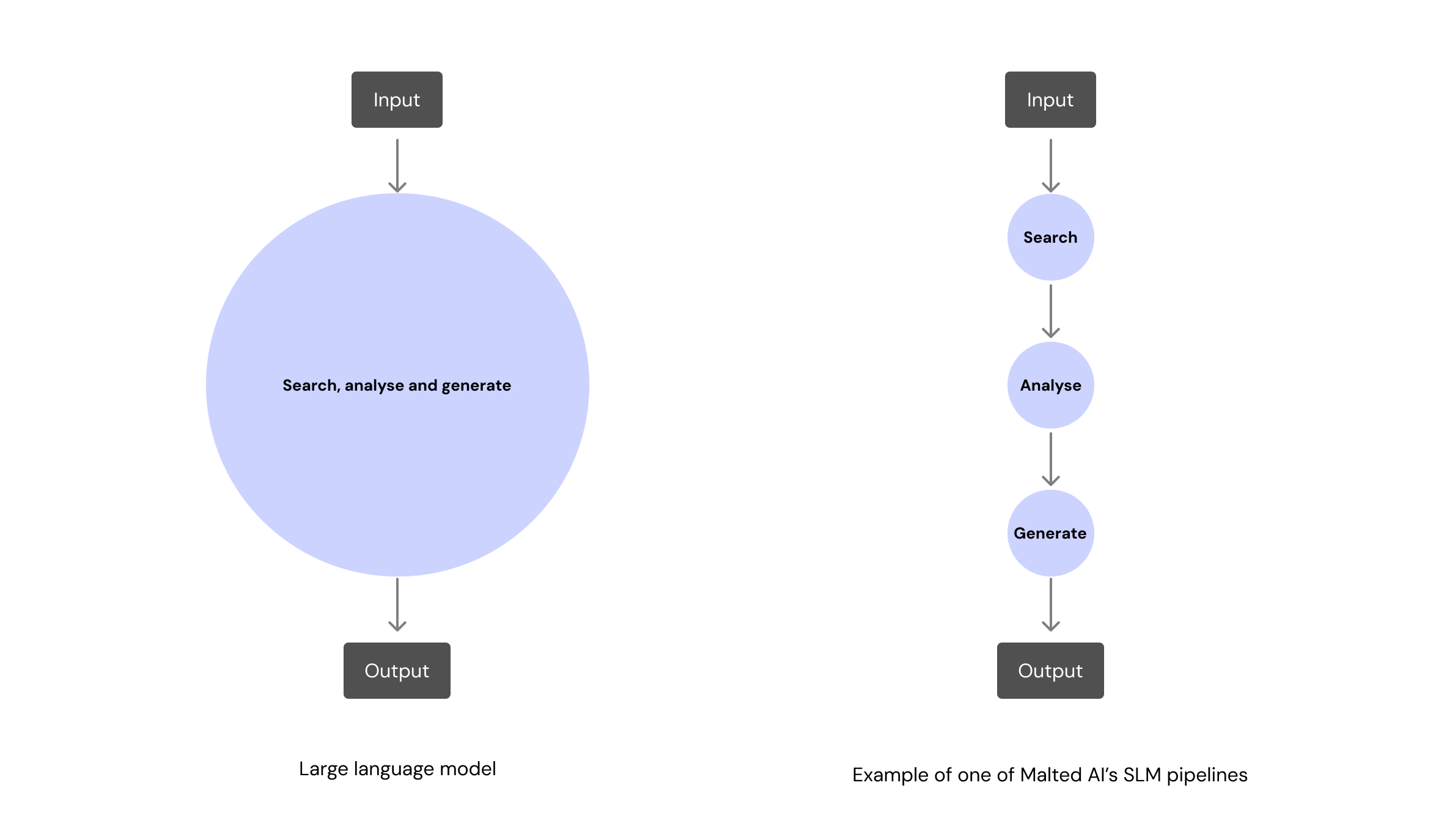

As described, both LLMs and SLMs have a role to play, depending on the use case. However, a single model is not enough to solve every use case in an enterprise setting. The following analogy illustrates why.

Let’s consider manufacturing a car. An LLM is like someone who knows a lot about cars in general. This person could build one that runs, but because they lack in-depth experience in any one area, they probably wouldn’t do the best job. They might, for example, choose a paint that works but is not suitable for the car’s material. The result would be a functional car that lacks the precision, performance, and durability expected of a top-notch automobile.

SLMs, on the other hand, can be compared to specialists. In the car manufacturing example, this could be someone who knows exactly how to paint a car or someone who is an expert in fitting wheels. Because building a car is complex and has multiple steps, a single expert SLM probably couldn’t build a whole car either, and might even do a worse job than the person with general knowledge about cars.

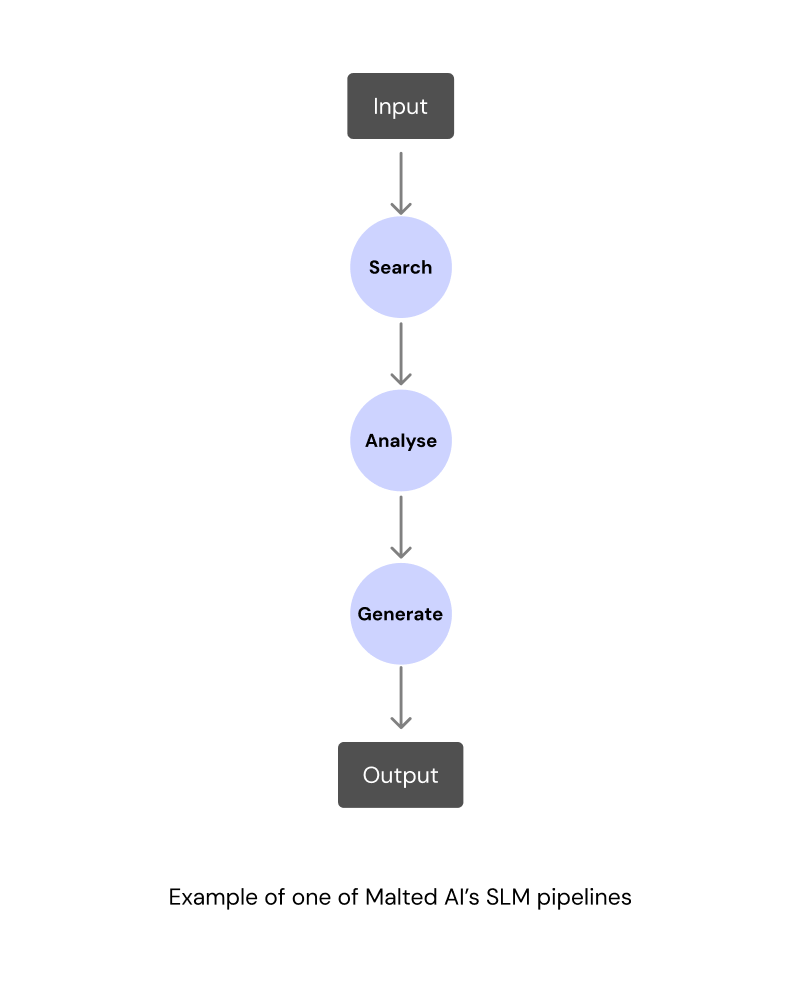

Here is where the concept of pipelines comes in. At Malted AI, we build pipelines of SLMs to solve complex multi-step use cases that require accuracy. Following the car manufacturing example, we assemble a team of expert SLMs to build a top-notch car.

Imagine one SLM dedicated exclusively to wheels, ensuring each one is fitted to precise specifications, and another that specialises in selecting the correct type of paint for the car’s material and intended environment. As mentioned earlier, because SLMs are small, we can deploy such solutions at scale while keeping them cost-effective and achieving better performance.

We leverage the strengths of SLMs through a modular pipeline, breaking complex problems down into smaller, manageable components and creating a ‘team’ of highly specialised expert SLMs.
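The ‘team of experts’ idea can be sketched as a registry that sends each subtask to the specialist responsible for it. In the minimal sketch below, the specialists are hypothetical placeholder functions standing in for fine-tuned SLMs, and the names `wheel_expert`, `paint_expert`, `SPECIALISTS` and `build` are illustrative only; this is not Malted AI’s implementation.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical specialists. In a real system each would call a fine-tuned SLM;
# here they return strings so the routing logic runs on its own.
def wheel_expert(spec: str) -> str:
    return f"wheels fitted to spec: {spec}"


def paint_expert(spec: str) -> str:
    return f"paint selected for: {spec}"


# Registry mapping each kind of subtask to the specialist that owns it.
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "wheels": wheel_expert,
    "paint": paint_expert,
}


def build(subtasks: List[Tuple[str, str]]) -> List[str]:
    """Route each (kind, spec) subtask to its registered specialist, in order."""
    results = []
    for kind, spec in subtasks:
        expert = SPECIALISTS.get(kind)
        if expert is None:
            raise ValueError(f"no specialist registered for {kind!r}")
        results.append(expert(spec))
    return results


print(build([("wheels", "18-inch alloy"), ("paint", "aluminium body, coastal climate")]))
```

Keeping every specialist behind the same interface means a new step can be added by registering another function, without changing the existing ones.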

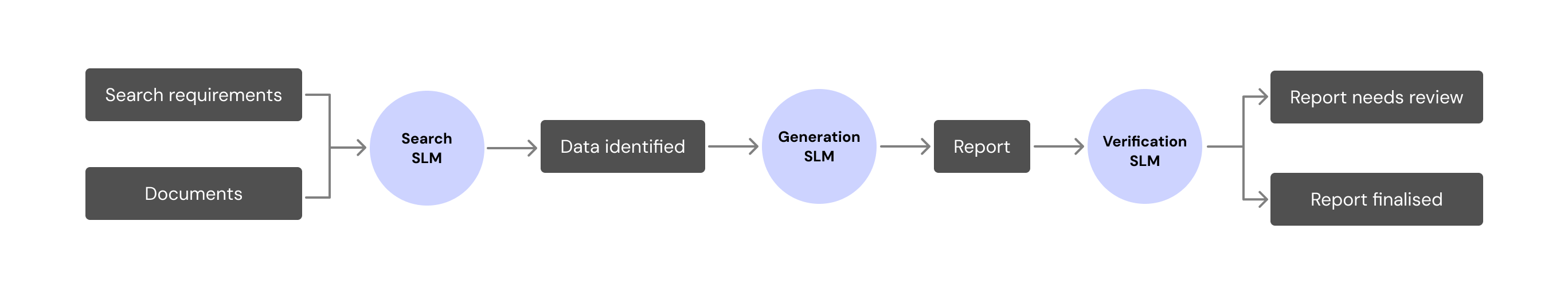

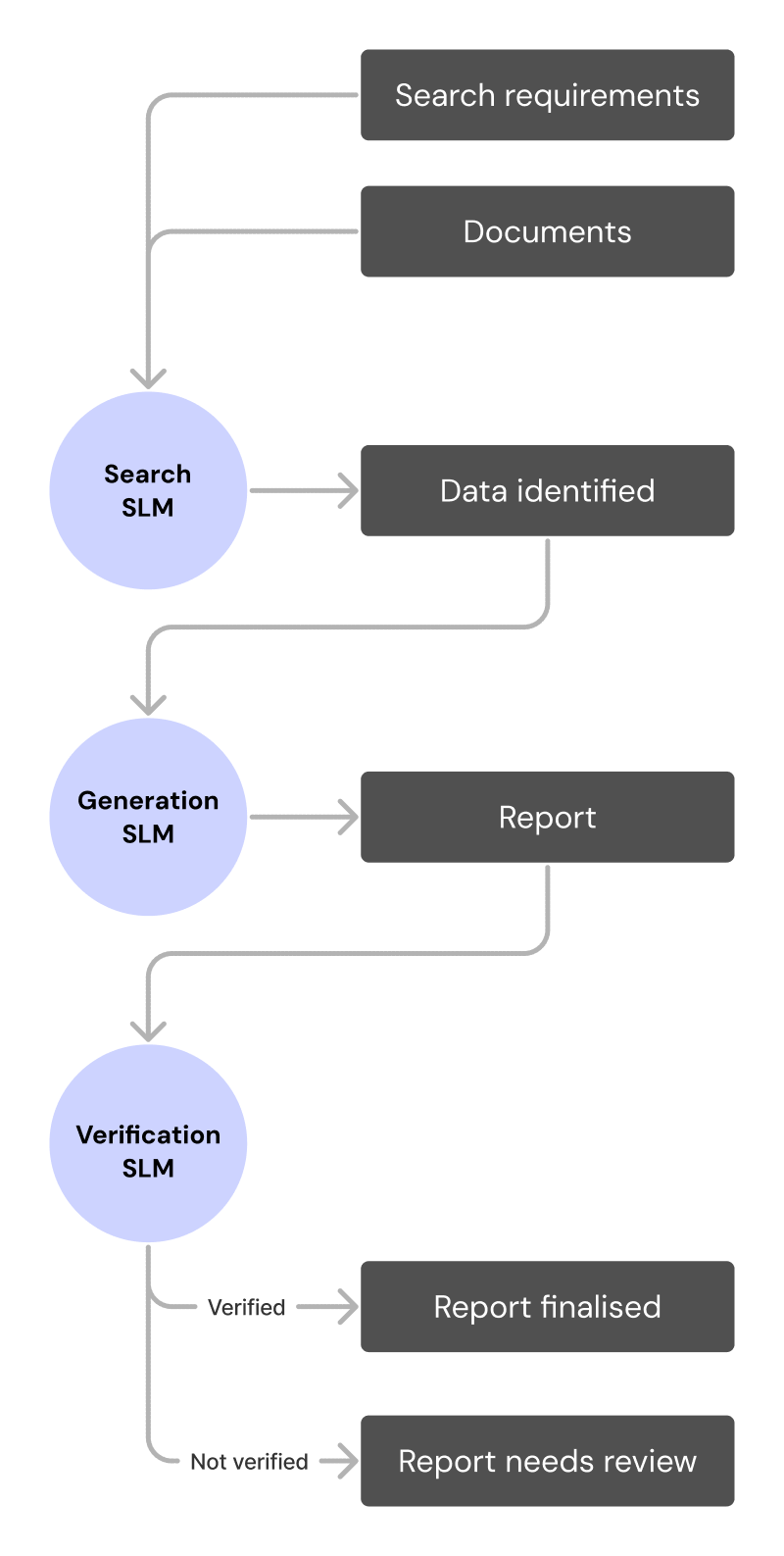

Taking the car manufacturing example to an enterprise use case, consider a task that involves searching through a collection of documents to gather information and compiling a report based on specific criteria. In this setup, the documents and a set of criteria are provided to a search SLM, which finds the required information. A second SLM then generates the report from the information retrieved in the previous step. To improve the reliability of the results, a third SLM can be trained to verify the outputs of the first two steps.
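The three-step flow of search, report generation and verification can be sketched as a chain of functions, where each stage consumes the previous stage’s output and the verifier can reject the result. This is a minimal sketch under stated assumptions: `search_slm`, `report_slm`, `verifier_slm` and `run_pipeline` are hypothetical names, simple keyword matching and string checks stand in for the trained models, and the sample documents are invented for illustration.

```python
from typing import List


# Hypothetical stand-ins for three specialised SLMs. Keyword matching and
# string formatting replace the models so that the control flow can run.
def search_slm(documents: List[str], criteria: List[str]) -> List[str]:
    """Step 1: return sentences that mention any of the criteria."""
    hits = []
    for doc in documents:
        for sentence in doc.split(". "):
            if any(c.lower() in sentence.lower() for c in criteria):
                hits.append(sentence.strip().rstrip("."))
    return hits


def report_slm(evidence: List[str], criteria: List[str]) -> str:
    """Step 2: compile the retrieved evidence into a short bulleted report."""
    lines = [f"Report on: {', '.join(criteria)}"]
    lines += [f"- {item}" for item in evidence]
    return "\n".join(lines)


def verifier_slm(report: str, documents: List[str], criteria: List[str]) -> bool:
    """Step 3: check each bullet is grounded in a document and each criterion is covered."""
    bullets = [line[2:] for line in report.splitlines() if line.startswith("- ")]
    grounded = all(any(b in doc for doc in documents) for b in bullets)
    covered = all(any(c.lower() in b.lower() for b in bullets) for c in criteria)
    return grounded and covered


def run_pipeline(documents: List[str], criteria: List[str]) -> str:
    evidence = search_slm(documents, criteria)
    report = report_slm(evidence, criteria)
    if not verifier_slm(report, documents, criteria):
        raise ValueError("verification failed: report is incomplete or ungrounded")
    return report


docs = ["Revenue grew 12% in Q3. Headcount was flat.",
        "Churn fell to 3% after the pricing change. Offices moved to Leeds."]
print(run_pipeline(docs, ["revenue", "churn"]))
```

Because the stages share only plain inputs and outputs, any one of them can be swapped for a trained model without changing the others, and a failed verification stops an incomplete report from being returned.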

Building pipelines allows us to optimise resource usage while maintaining high accuracy and performance. Each SLM in the pipeline is tasked with handling a distinct part of the process, ensuring the overall solution remains scalable and efficient.

If you are setting up an AI strategy in your organisation and would like to learn more about AI to make the best decisions, get in touch!